Searching Large Database Tables

Recently I ran into a little problem: I needed to make a simple Web UI allowing users to search a database table containing a copy of the Postfix maillog for quarantined mail addressed to them. Simple, right? It would have been if the table in question did not contain over a hundred million records. The table is continuously updated by the rsyslog service with maillog entries received from several Postfix servers.

The search query – originally done with the Log Analyser – took almost ten minutes on an 8-core CentOS 6.4 64-bit VM with 16GB of RAM. The hundred million records in the table represent only the past four weeks of maillog entries. The requirement was to keep up to one year of records, so do the math. And, yes, I am using MySQL. The options considered included (obviously) a bigger server, an Oracle database, table partitioning, database parameter tuning, a striped LUN running on many SSDs, etc.

Looking at the server load while executing the search query, I noticed something odd: after initial heavy filesystem activity, most of the wait time was due to user CPU time. So the filesystem performance was not the issue and, apparently, neither was the memory. It was the CPU: all that sorting and searching done by a single CPU core, while the seven remaining cores twiddled their little thumbs. Table partitioning – a feature frequently used when dealing with particularly large tables – is intended primarily to speed up searching subsets of data.

This would have been useful if, say, I needed to search only the past two weeks worth of data. Unfortunately, I need to search the entire table and so partitioning does me little good. What I really needed is to split up the table into a bunch of smaller tables and then search each one via separate SQL query, using separate CPUs, dumping the output into a single file or table. And so the solution seemed obvious: launch a bunch of SQL queries in parallel – each for a different subset of table data.

The fastest way to split up a table is by the auto-increment index field. You just subtract the smallest value from the largest and divide it by te number of available CPU cores. So, what happens when you do this? There is the same initial explosion of filesystem activity, as the data is being read from disk, an then every CPU core goes to a hundred percent searching its section of the hay stack. What you get is a more complete use of available system resources.

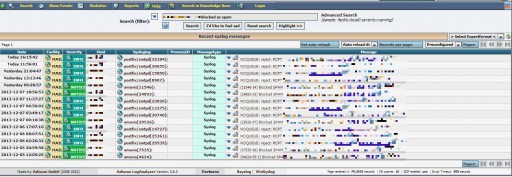

Here are some screenshots of the same search query being run against a table of over seventeen million records using Log Analyser and a simple parallel SQL query script I put together. I also included a couple of screenshots of nmon, showing system activity during query execution.

As you can see, a single CPU is doing all the work and disk activity is hardly noticeable.

Unsurprisingly, I had to wait almost 80 seconds for the results. Here’ the question: if you split up the load among eight CPUs, would you get the result eight times faster? Probably not, but, at this point, any improvement would be welcome.

Here’s a screenshot of nmon while running my parallel SQL query script. You can see the difference in system activity.

Some CPU wait time suggests that now a faster filesystem would be useful, so I may still go with those SSDs. As for the results…

Eleven seconds vs eighty. Not quite an eight-fold improvement, but close enough. Moral of the story: if you spend money on a nice big server – use as much of it as you can.

Below is an example of the script. Obviously, you will need to do some tweaking, but it’s nothing complicated.

#!/bin/ksh

# igor@krazyworks.com

# 2013-12-09

# MySQL parallel SQL query script

search_keyword="$1"

if [ -z "${search_keyword}" ] ; then exit 1 ; fi

configure() {

db_user="username"

db_pass="password"

db_name="database_name"

tbl_name="table_name"

ai_field="autoincrement_index_column"

MYSQL="/usr/bin/mysql --batch --skip-column-names --max_allowed_packet=100M -u${db_user} -p${db_pass} ${db_name} -e"

tmpdir="/tmp"

tmpfilename="mysql_parallel.tmp"

tmpfile="${tmpdir}/${tmpfilename}"

if [ -f "${tmpfile}" ] ; then /bin/rm -f "${tmpfile}" ; fi

outdir="/tmp"

outfilename="mysql_parallel.csv"

outfile="${outdir}/${outfilename}"

if [ -f "${outfile}" ] ; then /bin/rm -f "${outfile}" ; fi

max_connections=$(grep ^max_connections /etc/my.cnf | head -1 | awk -F'=' '{print $NF/4}')

cur_connections=$(ps -ef | grep -c [m]ysql)

cpu_cores=$(/usr/bin/nproc)

split_intervals=$(echo "scale=0;(${max_connections}-${cur_connections}) / $cpu_cores"|bc -l)

}

record_range() {

typeset -A array

i=0

seq $first $increment $(( last + increment )) | while read line

do

array[$i]=$line

(( i = i + 1 ))

done

array_count=$(echo "scale=0;`echo ${#array[*]}`-1"|bc -l)

i=1

from=$(echo $first)

to=$(echo ${array[$i]})

echo "$from@$to"

while [ $i -lt $array_count ]

do

from=$(echo "scale=0;`echo ${array[$i]}`+1"|bc -l)

(( i = i + 1 ))

to=$(echo ${array[$i]})

echo "$from@$to"

done

}

do_select() {

echo -n "Finding first ${ai_field}: "

first=$($MYSQL "SELECT ${ai_field} FROM ${tbl_name} ORDER BY ${ai_field} ASC LIMIT 1")

echo "${first}"

echo -n "Finding last ${ai_field}: "

last=$($MYSQL "SELECT ${ai_field} FROM ${tbl_name} ORDER BY ${ai_field} DESC LIMIT 1")

echo "${last}"

echo -n "Calculating ${ai_field} increment: "

increment=$(echo "scale=0;(${last}-${first})/${split_intervals}"|bc -l)

echo "${increment} records"

record_range | while read line

do

from=$(echo "${line}" | awk -F'@' '{print $1}')

to=$(echo "${line}" | awk -F'@' '{print $2}')

$MYSQL "SELECT column1,column2,column2 FROM $tbl_name WHERE ${ai_field} >= '${from}' AND ${ai_field} <= '${to}' AND column_whatever LIKE '%${search_keyword}%';" >> "${outfile}" & disown

done

}

configure

do_select

while [ `ps -ef | grep -c "${search_keyword}"` -gt 2 ]

do

sleep 1

done

cat << EOF

---------------------------------------------------------------------------------------

Done in $SECONDS seconds

Output in `hostname | awk -F'.' '{print $1}'`:${outfile} with `wc -l ${outfile} | awk '{print $1}'` results.

---------------------------------------------------------------------------------------

Detailed Results

---------------------------------------------------------------------------------------

`cat "${outfile}"`

---------------------------------------------------------------------------------------

EOF

![Plundering Facebook Photo Albums wyryr1sn52gntzn65v4fpz5lc6v[1]](https://i2.wp.com/www.krazyworks.com/wp-content/uploads/2021/08/wyryr1sn52gntzn65v4fpz5lc6v1-80x80.jpg)

![Scraping a Web Page in Bash clfud46mxf2t5zph2l04zlpgl1d[1]](https://i2.wp.com/www.krazyworks.com/wp-content/uploads/2021/08/clfud46mxf2t5zph2l04zlpgl1d1-80x80.jpg)

![Processing Videos with ffmpeg and Lightroom DSCF5361[1]](https://i1.wp.com/www.krazyworks.com/wp-content/uploads/2020/10/DSCF53611-80x80.jpg)

![Searching Twitter Cat-Devouring-A-Bird-Pablo-Picasso[1]](https://i0.wp.com/www.krazyworks.com/wp-content/uploads/2020/10/Cat-Devouring-A-Bird-Pablo-Picasso1-80x80.jpg)

![Generating Random Text Files for Testing 91ebozy1e3b138waujy28agrngy[1]](https://i2.wp.com/www.krazyworks.com/wp-content/uploads/2021/08/91ebozy1e3b138waujy28agrngy1-80x80.jpg)

![Finding Cron Jobs iwoau71okwxqh4jv3k4ook32qps[1]](https://i2.wp.com/www.krazyworks.com/wp-content/uploads/2021/08/iwoau71okwxqh4jv3k4ook32qps1-80x80.jpg)

![Understanding Memory Utilization in Linux 5gtysva4wkc8mzc1k3e66vaycxx[1]](https://i1.wp.com/www.krazyworks.com/wp-content/uploads/2021/08/5gtysva4wkc8mzc1k3e66vaycxx1-80x80.jpg)

![Validating HTTPS Cache Peers for Squid sy2zeb1vp2h8itlxfbbylw0jrm0[1]](https://i0.wp.com/www.krazyworks.com/wp-content/uploads/2019/12/sy2zeb1vp2h8itlxfbbylw0jrm01-80x80.jpg)

![Verifying SNMP Connectivity on Multiple Hosts Photo-2019-10-07-14-39-03_0266[1]](https://i1.wp.com/www.krazyworks.com/wp-content/uploads/2019/11/Photo-2019-10-07-14-39-03_02661-80x80.jpg)

No Comment »

1 Pingbacks »

[…] light of all the awesome multi-coreness we enjoy these days. There’ve been some attempts (1, 2) to get around this unfortunate limitation: both relying on running multiple queries in the […]